
The Vinum Volume Manager

Optimizing performance

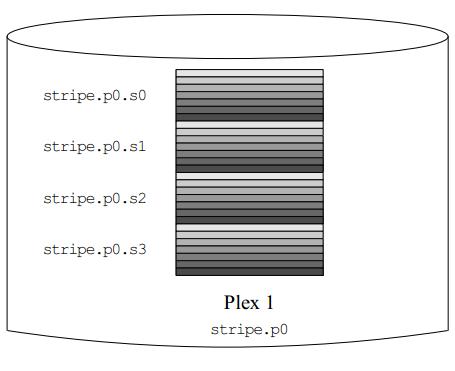

The mirrored volumes in the previous example are more resistant to failure than unmirrored volumes, but their performance is lower: each write to the volume requires a write to both drives, using up a greater proportion of the total disk bandwidth. Performance considerations demand a different approach: instead of mirroring, the data is striped across as many disk drives as possible. The following configuration shows a volume with a plex striped across four disk drives:

    drive c device /dev/da3s2h
    drive d device /dev/da4s2h
    volume stripe
      plex org striped 480k
        sd length 128m drive a
        sd length 128m drive b
        sd length 128m drive c
        sd length 128m drive d

When creating striped plexes for the UFS file system, make sure the stripe size is a multiple of the file system block size (normally 16 kB) but is not a power of 2. UFS frequently allocates cylinder groups whose lengths are a power of 2. If you also allocate stripes whose size is a power of 2, you may end up with all inodes on the same drive, which can significantly reduce performance under some circumstances. Files are allocated in blocks, so a stripe size that is not a multiple of the block size can cause significant fragmentation of I/O requests and a consequent drop in performance. See the vinum(8) man page for more details.
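The two rules above (a multiple of the block size, but not a power of 2) are easy to check mechanically. The following is a minimal sketch that assumes the 16 kB block size quoted in the text. The function names are hypothetical helpers and are not part of Vinum.

```python
# Sketch: check a candidate stripe size against the UFS guideline above.
# Assumes a 16 kB file system block size (the "normal" value in the text);
# pass a different block_kb if your file system uses another block size.
# Both helpers are hypothetical illustrations, not Vinum functions.


def is_power_of_two(n: int) -> bool:
    """True if n is a positive power of 2 (1, 2, 4, 8, ...)."""
    return n > 0 and (n & (n - 1)) == 0


def stripe_size_ok(stripe_kb: int, block_kb: int = 16) -> bool:
    """Acceptable if the stripe holds a whole number of file system
    blocks and its size is not itself a power of 2."""
    return stripe_kb % block_kb == 0 and not is_power_of_two(stripe_kb)


if __name__ == "__main__":
    for size in (256, 480, 500, 512):
        print(f"{size}k: {'ok' if stripe_size_ok(size) else 'avoid'}")
```

The 480k stripe used in this section passes both tests: it is 30 blocks of 16 kB, and it is not a power of 2. A 512k stripe fails the second rule, and a 500k stripe fails the first.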

Vinum requires that a striped plex have an integral number of stripes. You don't have to calculate the size exactly, though: if the size of the plex is not a multiple of the stripe size, Vinum trims off the remaining partial stripe and prints a console message:

vinum: removing 256 blocks of partial stripe at the end of stripe.p0
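The size of the trimmed partial stripe can be reproduced by hand. This sketch makes several assumptions: sizes are in kB, a "block" in the console message is a 512-byte sector, and the plex size is simply the sum of its equal-sized subdisks. It models only the arithmetic, not Vinum's actual code. The function name is hypothetical.

```python
# Sketch: the arithmetic behind "removing 256 blocks of partial stripe".
# Assumptions: sizes in kB, a "block" is a 512-byte sector, all subdisks
# are the same size. trim_striped_plex is a hypothetical helper.

SECTOR_BYTES = 512


def trim_striped_plex(subdisk_kb: int, n_subdisks: int, stripe_kb: int):
    """Return (usable_plex_kb, removed_sectors, usable_subdisk_kb)."""
    plex_kb = subdisk_kb * n_subdisks
    full_stripe_kb = stripe_kb * n_subdisks   # one stripe on every subdisk
    n_stripes = plex_kb // full_stripe_kb     # keep whole stripes only
    usable_kb = n_stripes * full_stripe_kb
    removed_sectors = (plex_kb - usable_kb) * 1024 // SECTOR_BYTES
    return usable_kb, removed_sectors, usable_kb // n_subdisks


# Four 128 MB subdisks with a 480k stripe, as in the "stripe" volume.
usable, removed, per_sd = trim_striped_plex(128 * 1024, 4, 480)
print(removed)                         # 256
print(usable // 1024, per_sd // 1024)  # 511 127
```

This yields a 511 MB plex built from 127 MB subdisks, which matches the listing that follows. Applying the same arithmetic to five 102480k subdisks gives the 499 MB plexes and 99 MB subdisks shown later for the raid10 volume.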

As before, it is not necessary to define the drives that are already known to Vinum. After processing this definition, the configuration looks like this:

    4 drives:
    D a               State: up            /dev/da1s2h   A: 2942/4094 MB (71%)
    D b               State: up            /dev/da2s2h   A: 2430/4094 MB (59%)
    D c               State: up            /dev/da3s2h   A: 3966/4094 MB (96%)
    D d               State: up            /dev/da4s2h   A: 3966/4094 MB (96%)

    3 volumes:
    V myvol           State: up            Plexes: 2     Size: 1024 MB
    V mirror          State: up            Plexes: 2     Size: 512 MB
    V stripe          State: up            Plexes: 1     Size: 511 MB

    5 plexes:
    P myvol.p0      C State: up            Subdisks: 1   Size: 512 MB
    P mirror.p0     C State: up            Subdisks: 1   Size: 512 MB
    P mirror.p1     C State: initializing  Subdisks: 1   Size: 512 MB
    P myvol.p1      C State: up            Subdisks: 1   Size: 1024 MB
    P stripe.p0     S State: up            Subdisks: 4   Size: 511 MB

    8 subdisks:
    S myvol.p0.s0     State: up            D: a          Size: 512 MB
    S mirror.p0.s0    State: up            D: a          Size: 512 MB
    S mirror.p1.s0    State: empty         D: b          Size: 512 MB
    S myvol.p1.s0     State: up            D: b          Size: 1024 MB
    S myvol.p0.s1     State: up            D: c          Size: 512 MB
    S stripe.p0.s0    State: up            D: a          Size: 127 MB
    S stripe.p0.s1    State: up            D: b          Size: 127 MB
    S stripe.p0.s2    State: up            D: c          Size: 127 MB
    S stripe.p0.s3    State: up            D: d          Size: 127 MB

This volume is represented in Figure 12-7. The darkness of the stripes indicates the position within the plex address space: the lightest stripes come first, the darkest last.
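The shading in the figure corresponds to the usual round-robin striping scheme. Under that scheme, consecutive stripes of the plex address space go to consecutive subdisks, then wrap around to the first subdisk. The sketch below maps a plex offset to a subdisk and an offset within that subdisk. It assumes that Vinum lays stripes out in this conventional order, and it uses kB offsets for readability. `map_offset` is a hypothetical helper, not part of Vinum.

```python
# Sketch of conventional round-robin striping: map an offset in the plex
# address space to (subdisk index, offset within that subdisk).
# Assumption: stripe 0 is on s0, stripe 1 on s1, ..., then wrap to s0.
# Offsets are in kB; map_offset is a hypothetical helper.


def map_offset(plex_kb: int, stripe_kb: int, n_subdisks: int) -> tuple[int, int]:
    stripe_no = plex_kb // stripe_kb          # which stripe in the plex
    subdisk = stripe_no % n_subdisks          # stripes rotate over subdisks
    row = stripe_no // n_subdisks             # full rotations before this one
    sd_offset = row * stripe_kb + plex_kb % stripe_kb
    return subdisk, sd_offset


# The "stripe" volume: 480k stripes over four subdisks.
for off in (0, 480, 1440, 1920, 2000):
    print(off, map_offset(off, 480, 4))
```

With 480k stripes over four subdisks, offset 1920k is the first byte of the second "row", so it lands on subdisk 0 at offset 480k. Sequential transfers larger than one stripe therefore keep all four drives busy.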

Resilience and performance

With sufficient hardware, it is possible to build volumes that show both increased resilience and increased performance compared to standard UNIX partitions. Mirrored disks give better write performance than RAID-5, because every small RAID-5 write must also read and rewrite parity. A typical configuration file might therefore be:
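The write-performance argument can be illustrated with a back-of-the-envelope estimate. The following is a sketch under hypothetical assumptions: every drive sustains the same random IOPS, load is spread evenly, and RAID-5 uses the textbook read-modify-write cycle (read old data, read old parity, write new data, write new parity). The 100 IOPS figure is made up for illustration, and caches or controllers will change real results.

```python
# Rough estimate of sustained small-write rate for an array of n_disks.
# Hypothetical assumptions: identical drives, evenly spread load, and the
# textbook RAID-5 read-modify-write cycle. Not measured Vinum behaviour.

# Physical disk I/Os needed for one small logical write.
IOS_PER_SMALL_WRITE = {
    "mirror": 2,   # one write to each of two plexes
    "raid5": 4,    # read data + read parity + write data + write parity
}


def small_write_rate(n_disks: int, iops_per_disk: float, layout: str) -> float:
    """Logical small writes per second the whole array can sustain."""
    return n_disks * iops_per_disk / IOS_PER_SMALL_WRITE[layout]


# Ten drives at a hypothetical 100 IOPS each.
print(small_write_rate(10, 100, "mirror"))  # 500.0
print(small_write_rate(10, 100, "raid5"))   # 250.0
```

Under these assumptions, the same ten spindles sustain roughly twice as many small writes when mirrored as when used for RAID-5. That difference motivates the striped-and-mirrored layout below.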

    drive e device /dev/da5s2h
    drive f device /dev/da6s2h
    drive g device /dev/da7s2h
    drive h device /dev/da8s2h
    drive i device /dev/da9s2h
    drive j device /dev/da10s2h
    volume raid10 setupstate
      plex org striped 480k
        sd length 102480k drive a
        sd length 102480k drive b
        sd length 102480k drive c
        sd length 102480k drive d
        sd length 102480k drive e
      plex org striped 480k
        sd length 102480k drive f
        sd length 102480k drive g
        sd length 102480k drive h
        sd length 102480k drive i
        sd length 102480k drive j

In this example, we have added another five disks for the second plex, so the volume is spread over ten spindles. We have also used the setupstate keyword so that all components come up. The volume looks like this:

    vinum -> l -r raid10
    V raid10          State: up   Plexes: 2     Size: 499 MB
    P raid10.p0     S State: up   Subdisks: 5   Size: 499 MB
    P raid10.p1     S State: up   Subdisks: 5   Size: 499 MB
    S raid10.p0.s0    State: up   D: a          Size: 99 MB
    S raid10.p0.s1    State: up   D: b          Size: 99 MB
    S raid10.p0.s2    State: up   D: c          Size: 99 MB
    S raid10.p0.s3    State: up   D: d          Size: 99 MB
    S raid10.p0.s4    State: up   D: e          Size: 99 MB
    S raid10.p1.s0    State: up   D: f          Size: 99 MB
    S raid10.p1.s1    State: up   D: g          Size: 99 MB
    S raid10.p1.s2    State: up   D: h          Size: 99 MB
    S raid10.p1.s3    State: up   D: i          Size: 99 MB
    S raid10.p1.s4    State: up   D: j          Size: 99 MB

This assumes the availability of ten disks. It's not essential to have all the components on different disks. You could put the subdisks of the second plex on the same drives as the subdisks of the first plex. If you do so, you should put corresponding subdisks on different drives:

    plex org striped 480k
      sd length 102480k drive a
      sd length 102480k drive b
      sd length 102480k drive c
      sd length 102480k drive d
      sd length 102480k drive e
    plex org striped 480k
      sd length 102480k drive c
      sd length 102480k drive d
      sd length 102480k drive e
      sd length 102480k drive a
      sd length 102480k drive b

The subdisks of the second plex are offset by two drives from those of the first plex: this helps ensure that the failure of a drive does not cause the same part of both plexes to become unreachable, which would destroy the file system.
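The offset rule can be checked mechanically. Corresponding subdisks (same index in each plex) hold the same part of the volume address space, so no index should have both copies on one drive. The drive lists below are copied from the configuration above. `shared_positions` and `rotate` are hypothetical helpers for illustration.

```python
# Sketch: verify that no pair of corresponding subdisks (same index, hence
# the same part of the volume) sits on the same drive in both plexes.
# Drive lists come from the configuration above; the helpers are
# hypothetical illustrations, not Vinum functions.

plex0 = ["a", "b", "c", "d", "e"]
plex1 = ["c", "d", "e", "a", "b"]


def shared_positions(p0: list[str], p1: list[str]) -> list[int]:
    """Subdisk indexes whose copies in both plexes share a drive."""
    return [i for i, (x, y) in enumerate(zip(p0, p1)) if x == y]


def rotate(drives: list[str], k: int) -> list[str]:
    """Drive list shifted left by k positions."""
    return drives[k:] + drives[:k]


print(rotate(plex0, 2) == plex1)                 # True: offset by two drives
print(shared_positions(plex0, plex1))            # []: every pair is split
print(shared_positions(plex0, rotate(plex0, 0))) # [0, 1, 2, 3, 4]
```

Any nonzero rotation of the drive list would pass this check. An unrotated second plex fails at every index, because a single drive failure would then remove both copies of the same data.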

Figure 12-8 represents the structure of this volume.