Optimizing compiler. Interprocedural optimizations

Interprocedural optimization


How can good programming style be combined with the speed requirements of an application?

Good programming style assumes:

Modularity of the source code complicates the task of optimization.

All previously discussed optimizations work at the procedure level:

- Optimizations work effectively with local variables

- Every function call is a "black box" with unknown properties

- The properties of procedure parameters are unknown

- The properties of global variables are unknown

To solve these problems, the program has to be analyzed as a whole.

Some basic problems of procedure-level optimizations

- Scalar optimizations:

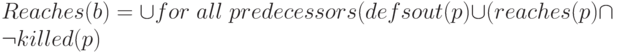

- According to the iterative algorithm for data flow analysis, when a basic block p calls an unknown function, all local and global variables which can be changed inside this function according to the language rules must be put into killed(p).

To perform high-quality optimizations, the compiler needs to know which objects can be changed inside the function.

- Loop optimizations: For high-quality loop optimizations the compiler needs:

- Loop vectorization: For successful loop vectorization, information about the memory alignment of objects can be very useful.

To obtain such information we need to analyze the entire program. This interprocedural information improves many classic intraprocedural optimizations.
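The effect of an unknown call on killed(p) can be sketched in C. This is only an illustration under stated assumptions: variables are encoded as bits of a mask, and the names `VarSet`, `BasicBlock`, `killed_conservative` and `killed_with_mod` are hypothetical, not part of any compiler. The set `address_taken` stands for the locals that the language rules allow a callee to change.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical encoding: each variable visible in the procedure is one bit. */
typedef uint32_t VarSet;

typedef struct {
    VarSet defined;   /* variables assigned directly inside the block */
    bool   has_call;  /* the block contains a function call */
} BasicBlock;

/* killed(p) without interprocedural information: the callee is a black box,
   so every global and every local whose address has been taken is assumed
   to be changed by the call. */
VarSet killed_conservative(const BasicBlock *p, VarSet globals,
                           VarSet address_taken)
{
    VarSet k = p->defined;
    if (p->has_call)
        k |= globals | address_taken;
    return k;
}

/* killed(p) when whole-program analysis has computed the set of objects
   the callee may modify (callee_mod). */
VarSet killed_with_mod(const BasicBlock *p, VarSet callee_mod)
{
    VarSet k = p->defined;
    if (p->has_call)
        k |= callee_mod;
    return k;
}
```

For a block that defines variable bit `0x1` and calls a function, the conservative version kills all globals and address-taken locals, while the second version kills only what the callee is known to modify, so more constants and available expressions survive the call.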

One-pass and multi-pass compilation

In computer programming, a one-pass compiler is a compiler that processes the source code of each compilation unit only once; it does not look back at previously processed code. A multi-pass compiler traverses the source code or its internal representation several times.

To gather information about function properties, the compiler needs to analyze every function and its connections with other functions, because each of them can contain calls to any function, including itself (recursion). For this it is necessary to analyze the call graph. A call graph represents the calling relationships between subroutines in a computer program. Each node represents a procedure, and each edge (f, g) indicates that procedure f calls procedure g.

A call graph may be static (calculated at compile time) or dynamic. A static call graph contains all possible ways control can be passed. A dynamic call graph is obtained during program execution and can be useful for performance analysis.

One of the main tasks of interprocedural analysis is constructing the call graph and determining function properties. For example, global data flow analysis computes the objects which can be modified within a function.

A call graph can be complete or incomplete. If an application uses routines from an external library, the graph will be incomplete and a full analysis cannot be performed.
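As an illustration of the call graph described above, the following C sketch stores the edges (f, g) in an adjacency matrix and detects recursion as a cycle through a procedure. The names `CallGraph`, `add_call`, `is_recursive` and the bound `MAX_PROCS` are assumptions made for this example; a real compiler would use its own data structures.

```c
#include <stdbool.h>

#define MAX_PROCS 8   /* assumed upper bound for this sketch */

typedef struct {
    int  nprocs;
    bool calls[MAX_PROCS][MAX_PROCS];  /* calls[f][g]: edge (f, g), f calls g */
} CallGraph;

/* Record that procedure f calls procedure g. */
void add_call(CallGraph *cg, int f, int g)
{
    cg->calls[f][g] = true;
}

/* Depth-first search: can target be reached from `from`
   by following at least one call edge? */
static bool reaches(const CallGraph *cg, int from, int target, bool *seen)
{
    for (int g = 0; g < cg->nprocs; g++) {
        if (!cg->calls[from][g])
            continue;
        if (g == target)
            return true;
        if (!seen[g]) {
            seen[g] = true;
            if (reaches(cg, g, target, seen))
                return true;
        }
    }
    return false;
}

/* A procedure is (directly or indirectly) recursive
   if it lies on a cycle of the call graph. */
bool is_recursive(const CallGraph *cg, int f)
{
    bool seen[MAX_PROCS] = { false };
    return reaches(cg, f, f, seen);
}
```

With edges 0→1, 1→2, 2→1 and 3→3, procedures 1, 2 and 3 are reported as recursive and procedure 0 is not. The sketch covers only direct calls that are visible in the graph; calls through function pointers or into external libraries would make the graph incomplete.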

One-pass and multi-pass compilation actions

With one-pass compilation, the compiler performs the following steps: parsing and creation of the internal representation, profile analysis, scalar and loop optimizations, and code generation. An object file is generated for each source file, and a linker builds the application from these files.

With multi-pass compilation, during the first pass the compiler parses the source, creates the internal representation, performs some scalar optimizations, and saves the internal representation in the object files. These files contain the packed internal representation of the corresponding source files, which makes interprocedural analysis and optimization possible. During this analysis the call graph is built and additional function properties are collected. The next step is the interprocedural optimizations, which work on parts of the call graph. Finally, the compiler performs scalar and loop optimizations, and one or several final object files are generated.

Main compiler options for interprocedural optimizations

/Qipo[n] enables interprocedural optimization between files. This is also called multifile interprocedural optimization (multifile IPO) or Whole Program Optimization (WPO).

/Qipo-c generates a multi-file object file (ipo_out.obj)

/Qipo-S generates a multi-file assembly file (ipo_out.asm)

/Qipo-jobs<n> specifies the number of jobs to be executed simultaneously during the IPO link phase

There is also a partial interprocedural analysis which works within the scope of a single file. In this case a partial call graph is built and the interprocedural optimizations are performed according to the information obtained from analyzing this graph.

/Qip[-] enables (DEFAULT) or disables single-file IP optimization within files

Mod/Ref Analysis

Interprocedural analysis collects MOD and REF sets for each routine. MOD/REF sets contain objects which can be modified or referenced during the routine execution.

These sets can be used for scalar optimizations.

    /* test.c */
    #include <stdio.h>

    extern void unknown(int *a);

    int main() {
        int a, b, c;
        a = 5;
        c = a;
        unknown(&a);   /* the address of a is passed to another file */
        if (a == 5)
            printf("a==5\n");
        b = a;
        printf("%d %d %d\n", a, b, c);
        return 0;
    }

    /* unknown.c */
    #include <stdio.h>

    /* Only reads *a; the caller's variable is never modified. */
    void unknown(int *a) {
        printf("a=%d\n", *a);
    }

Let’s consider a simple example. There are two files. Function main contains a call of function "unknown", which is located in another file. Local variable "a" is assigned a constant value before this call. If the compiler knows that "unknown" does not modify "a", the constant can be propagated across the call, the condition of the following if statement is known to be true, and the check can be deleted.

We can use the assembly files to determine whether the check if(a==5) was deleted or not.

icl test.c unknown.c -S

Without IPO the check is still present. Let’s inspect the test.asm file.

    call      _unknown                          ;9.1
    ...
    .B1.2:                                      ; Preds .B1.8
    mov       edi, DWORD PTR [a.302.0.1]        ;10.4
    cmp       edi, 5                            ;10.7
    jne       .B1.4         ; Prob 0%           ;10.7

icl -Ob0 test.c unknown.c -Qipo -S

With -Qipo the check is eliminated. -Ob0 is needed to prevent inlining of unknown.

    call      _unknown.                                     ;9.1
    ...
    .B1.2:                                                  ; Preds .B1.7
    push      OFFSET FLAT: ??_C@_05A@a?$DN?$DN5?6?$AA@      ;11.3
    call      _printf                                       ;11.3
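How MOD sets could be collected over a call graph can be sketched as a fixed-point iteration: a routine may modify everything it writes directly plus everything its callees may modify. The following C code is a minimal sketch under that assumption; the types `Program` and `ObjSet`, the function `compute_mod` and the one-bit-per-object encoding are hypothetical and do not describe the actual compiler implementation.

```c
#include <stdbool.h>
#include <stdint.h>

#define MAX_ROUTINES 8     /* assumed upper bound for this sketch */

typedef uint32_t ObjSet;   /* hypothetical encoding: one bit per object */

typedef struct {
    int    nroutines;
    ObjSet direct_mod[MAX_ROUTINES];            /* objects written directly */
    bool   calls[MAX_ROUTINES][MAX_ROUTINES];   /* calls[f][g]: f calls g */
} Program;

/* MOD(f) = direct_mod(f) united with MOD(g) for every g called by f.
   The sets only grow, so iterating until nothing changes terminates,
   even when the call graph contains recursion. */
void compute_mod(const Program *prog, ObjSet mod[MAX_ROUTINES])
{
    for (int f = 0; f < prog->nroutines; f++)
        mod[f] = prog->direct_mod[f];

    bool changed = true;
    while (changed) {
        changed = false;
        for (int f = 0; f < prog->nroutines; f++) {
            for (int g = 0; g < prog->nroutines; g++) {
                if (!prog->calls[f][g])
                    continue;
                ObjSet merged = mod[f] | mod[g];
                if (merged != mod[f]) {
                    mod[f] = merged;
                    changed = true;
                }
            }
        }
    }
}
```

A routine such as "unknown" in the example, which only reads its argument and calls nothing that writes it, ends up with an empty MOD set for "a". That is the fact that allows the constant 5 to be propagated across the call.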

Alias analysis

Alias analysis is used to determine whether a storage location may be accessed in more than one way. Two pointers are said to be aliased if they point to the same location.

- explicit aliasing: different objects refer to the same memory according to the programming language rules (union in C/C++, EQUIVALENCE in Fortran)

- parameter aliasing: a formal argument can be aliased with another formal argument or with objects from the global scope.

- pointer analysis: pointers can be aliased if the sets of objects which can be referenced by these pointers have common elements.

Alias analysis is important to find loop dependences.

Alias analysis example

    #include <stdio.h>

    int p1 = 1, p2 = 2;
    int *a, *b;

    void init(int **a, int **b) {
        *a = &p1;
        *b = &p1;   /* <= a and b both point to p1 */
    }

    int main() {
        int i, ar[100];
        init(&a, &b);
        printf("*a= %d *b=%d\n", *a, *b);
        for (i = 0; i < 100; i++) {
            ar[i] = i * (*a) * (*a);
            *b += 1;   /* *a is changed through *b */
        }
        printf("ar[50]= %d p2=%d\n", ar[50], p2);
    }

A dependence may appear if two pointers (a and b) reference the same memory location. In this case the compiler cannot treat *a as loop invariant, and loop optimizations which reorder or hoist these memory accesses are prohibited.
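Why the dependence matters can be shown with two versions of the loop. The sketch below uses hypothetical function names, `fill_reload` and `fill_hoisted`. The first keeps the semantics of the source loop. The second is what an optimizer could produce only after proving that a and b never point to the same location. It also assumes that the array ar does not overlap with *a or *b.

```c
/* Source semantics: *a is reloaded on every iteration,
   so a store through b is visible if b aliases a. */
void fill_reload(int *ar, int n, const int *a, int *b)
{
    for (int i = 0; i < n; i++) {
        ar[i] = i * (*a) * (*a);
        *b += 1;
    }
}

/* Transformed loop: the load of *a is hoisted out of the loop.
   This is valid only if a and b do not alias. */
void fill_hoisted(int *ar, int n, const int *a, int *b)
{
    int av = *a;   /* treated as loop invariant */
    for (int i = 0; i < n; i++) {
        ar[i] = i * av * av;
        *b += 1;
    }
}
```

When both pointers refer to the same variable with initial value 1, as in the example above, the first version stores 50 * 51 * 51 = 130050 into ar[50], while the hoisted version stores 50. When the pointers refer to different variables, both versions give the same result, which is why proving the absence of aliasing enables the optimization.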

Other aspects of the interprocedural analysis

Interprocedural analysis is used:

- to determine function attributes. For example, attributes such as "no_side_effect" and "always_return" are used to simplify some kinds of analysis and optimization.

- to determine variable attributes. For example, if a variable does not have the attribute "address was taken", then it cannot be updated through pointers, which simplifies many optimizations. Whole-program analysis is required to handle global variables.

- for data promotion. Each variable has a scope. IPA makes it possible to reduce this scope according to the real usage.

- to remove unused global variables.

- to remove dead code. The call graph can contain subgraphs which are not connected with the program entry. Such subgraphs can be safely removed from the final generated executable.

- to provide information about argument alignment. If the actual function arguments are always aligned, then vectorization of the procedure can be improved.
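The dead code item in the list above can be sketched as a reachability pass over the call graph: every function reachable from the program entry is marked live, and the rest are candidates for removal. The names `FuncGraph`, `mark_live` and `MAX_FUNCS` are assumptions for this illustration. The sketch also assumes a complete call graph in which every call is a direct one; a function whose address is taken would have to be treated as live.

```c
#include <stdbool.h>

#define MAX_FUNCS 8   /* assumed upper bound for this sketch */

typedef struct {
    int  nfuncs;
    bool calls[MAX_FUNCS][MAX_FUNCS];   /* calls[f][g]: f calls g */
} FuncGraph;

/* Marks every function reachable from entry and returns how many are live.
   Functions left unmarked belong to subgraphs that are not connected
   with the program entry. */
int mark_live(const FuncGraph *fg, int entry, bool live[MAX_FUNCS])
{
    int stack[MAX_FUNCS];   /* each function is pushed at most once */
    int top = 0;
    int count = 0;

    for (int f = 0; f < fg->nfuncs; f++)
        live[f] = false;

    live[entry] = true;
    stack[top++] = entry;

    while (top > 0) {
        int f = stack[--top];
        count++;
        for (int g = 0; g < fg->nfuncs; g++) {
            if (fg->calls[f][g] && !live[g]) {
                live[g] = true;
                stack[top++] = g;
            }
        }
    }
    return count;
}
```

For a graph with edges 0→1, 1→2, 3→4 and 4→3 and entry 0, functions 0, 1 and 2 are marked live. Functions 3 and 4 call each other but are never reached from the entry, so they form a removable subgraph.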